Simulating confounders

Let's simulate a confounding relationship now, and see what happens when we test for association. For simplicity we'll use continuous variables and linear regression.

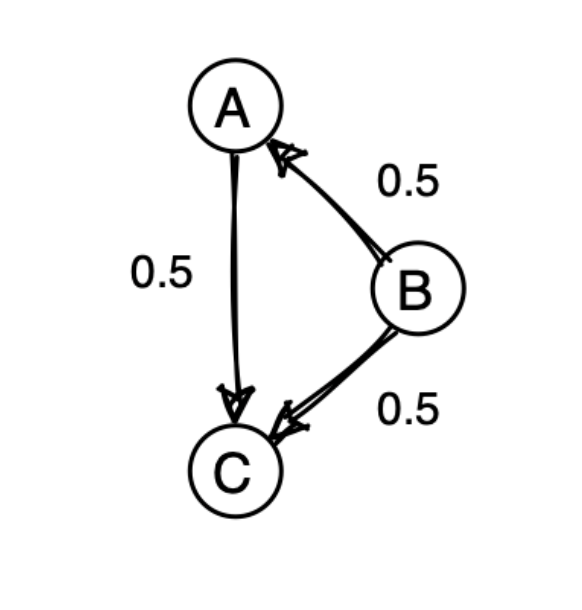

Let's imagine we are interested in the effect of a variable and an outcome variable . But there is a confounder . What does that do to our association?

We'll simulate a classic confounding relationship like this:

So affects both the predictor and the outcome .

Simulating

We'll make this as simple as possible, by making every variable be normally distributed. And all the effect sizes will be 0.5 as indicated in the diagram.

Let's start now, simulating data on samples, say.

N = 1000

First, since has no edges coming into it, we can simulate it simply by randomly drawing from a normal distribution:

B = rnorm( N, mean = 0, sd = 1 )

Second, since has only one arrow into it (from ), we camn simulate it as plus some noise. To keep things simple, we'll add 'just enough' noise so it has variance again:

A = 0.5 * B + rnorm( N, mean = 0, sd = sqrt( 0.75 ))

Why the square root of 0.75 here?

Well, has variance , so has variance . If we want to have variance we have to add on something with variance .

Finally, let's simulate . It ought to be made up of a contribution from , a contribution from , and some additional noise:

How much noise? Again, the contributions from and each have variance . But they also covary which has to be taken account of. The correct formula works out to be

The covariance is 0.5 so this works out as 0.25:

C = 0.5*A + 0.5*B + rnorm( N, mean = 0, sd = sqrt( 0.25 ))

Let's check this now by computing the covariance between variables.

simulated_data = tibble(

A = A,

B = B,

C = C

)

cov( simulated_data )

You should see something like this:

> cov( simulated_data )

A B C

A 1.0396708 0.5128317 0.7698858

B 0.5128317 1.0058647 0.7557131

C 0.7698858 0.7557131 1.0113318

Naive testing for association

Now let's fit our linear regression model of the outcome variable on :

fit1 = lm( C ~ A )

coeffs1 = summary(fit1)$coeff

print( coeffs1 )

What estimate do you get for the effect of on ? Does it capture the causal effect? Is it too high? Too low?

Testing for association controlling for confounding

The great thing about regression models is they make it easy to control for confounders - if only yuou have measured them! Here is another linear regression fit that simultaneously fits both amd as predictors:

fit2 = lm( C ~ A + B )

coeffs2 = summary(fit2)$coeff

print( coeffs2 )

What estimate do you get for the effect of on ? Does it capture the causal effect? Is it too high? Too low?

Go back and re-simulate the data but having a true effect size of zero between and . What happens to the estimates now?