Simulating confounders

Let's simulate a confounding relationship now, and see what happens when we test for association. For simplicity we'll use continuous variables and linear regression.

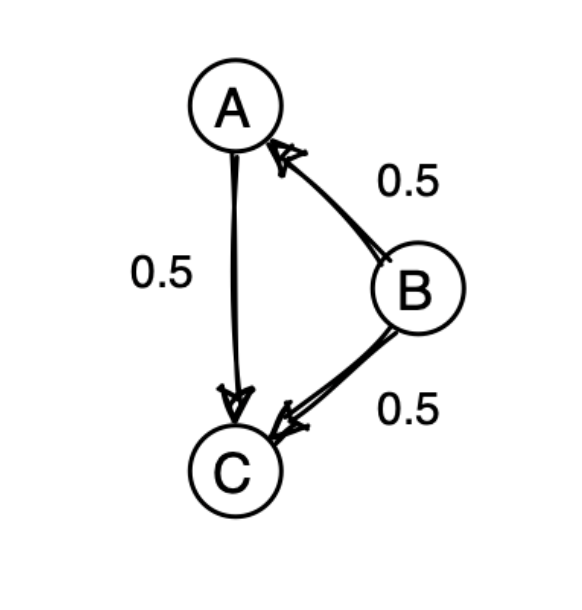

Let's imagine we are interested in the effect of a variable $A$ on an outcome variable $C$. But there is a confounder $B$. What does that do to our association?

We'll simulate a classic confounding relationship like this:

So $B$ is a confounder: it affects both the predictor $A$ and the outcome $C$. The arrows are $B \to A$, $B \to C$ and $A \to C$.

Simulating

We'll make this as simple as possible by making every variable normally distributed with variance $1$. All the effect sizes will be $0.5$, as indicated in the diagram.

Let's start now, simulating data on $N = 1000$ samples, say.

N = 1000

First, since $B$ has no edges coming into it, we can simulate it simply by randomly drawing from a normal distribution:

B = rnorm( N, mean = 0, sd = 1 )

Second, since $A$ has only one arrow into it (from $B$), we can simulate it as $0.5 \times B$ plus some noise. To keep things simple, we'll add 'just enough' noise so it has variance $1$ again:

A = 0.5 * B + rnorm( N, mean = 0, sd = sqrt(0.75) )

It turns out that adding noise with variance $0.75$ is just the right thing here to make $A$ have variance $1$ again.

The reason for this is the property of the variance that, if $X$ and $Y$ are independent, then

$$\text{var}(X+Y) = \text{var}(X) + \text{var}(Y)$$

In our case, since $B$ has variance $1$, $0.5 \times B$ has variance $0.5^2 \times 1 = 0.25$. Therefore to make $A$ up to variance $1$ we need to add some independent 'noise' with variance $0.75$. That's what the code above does.

If you want to see more detail on how these variance computations are carried out, see the 'extras' page on computing with (co)variances.
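As a quick numerical sanity check of this argument, the sketch below re-simulates $B$ and $A$ with a larger sample and compares the empirical variances with the predicted values. The seed, the larger sample size and the names `n_check`, `B_check` and `A_check` are assumptions chosen for illustration; they are not part of the tutorial's own code.

```r
# Illustrative check only: compare empirical variances with the predicted values.
set.seed( 12345 )          # arbitrary seed, used only for reproducibility
n_check = 100000           # larger than N so that sampling noise is small

B_check = rnorm( n_check, mean = 0, sd = 1 )
A_check = 0.5 * B_check + rnorm( n_check, mean = 0, sd = sqrt(0.75) )

var_half_B = var( 0.5 * B_check )   # predicted: 0.5^2 * 1 = 0.25
var_A = var( A_check )              # predicted: 0.25 + 0.75 = 1

print( c( var_half_B = var_half_B, var_A = var_A ) )
```

The printed values will not be exactly 0.25 and 1 because they are estimates from a finite sample, but they should be close.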

Finally, let's simulate $C$. It ought to be made up of a contribution from $A$, a contribution from $B$, and some additional noise:

$$C = 0.5 \times A + 0.5 \times B + \text{noise}$$

How much noise? Again, the contributions from $A$ and $B$ each have variance $0.25$. But they also covary, which has to be taken into account. The correct formula turns out to be:

C = 0.5*A + 0.5*B + rnorm( N, mean = 0, sd = sqrt( 0.25 ))

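Why is this? Again it uses the basic formula for adding variances, this time without assuming independence:

$$\text{var}(X+Y) = \text{var}(X) + \text{var}(Y) + 2\,\text{cov}(X,Y)$$

Here $\text{cov}(X,Y)$ is the covariance between $X$ and $Y$ - a measure of their collinearity (after subtracting the mean).

This and other formulae are derived on the computing with (co)variance page.

If you apply this in our example, adding up a half of each of $A$ and $B$, it works out as:

$$\text{var}\left(\tfrac{1}{2}A + \tfrac{1}{2}B\right) = \tfrac{1}{4}\text{var}(A) + \tfrac{1}{4}\text{var}(B) + \tfrac{1}{2}\text{cov}(A,B)$$

Since $A$ and $B$ both have variance $1$, and since the covariance of $A$ and $B$ is $0.5$ because of how we computed $A$, this works out as $0.25 + 0.25 + 0.25 = 0.75$. So to make $C$ have variance $1$ again we need to add independent noise with variance $0.25$, which is what the line above does.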
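The same arithmetic can be written as a small R function, which may be handy if you want to try other effect sizes. This is only a sketch: `var_linear_combination` is a hypothetical helper introduced here for illustration, not something from the tutorial or from any package.

```r
# Hypothetical helper: variance of a*X + b*Y, using
# var(aX + bY) = a^2 var(X) + b^2 var(Y) + 2ab cov(X,Y)
var_linear_combination = function( a, b, var_x, var_y, cov_xy ) {
  a^2 * var_x + b^2 * var_y + 2 * a * b * cov_xy
}

# Variance contributed by 0.5*A + 0.5*B, with var(A) = var(B) = 1, cov(A,B) = 0.5
signal_variance = var_linear_combination( 0.5, 0.5, 1, 1, 0.5 )

# Independent noise needed to bring C up to variance 1
noise_variance = 1 - signal_variance

print( c( signal = signal_variance, noise = noise_variance ) )
```

Here `signal_variance` comes out as 0.75 and `noise_variance` as 0.25, matching the `sd = sqrt( 0.25 )` used when simulating `C`.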

Let's check this all worked now by computing the covariance between variables.

library( tibble )  # provides tibble(); not needed if the tidyverse is already loaded

simulated_data = tibble(

A = A,

B = B,

C = C

)

cov( simulated_data )

You should see something like this:

> cov( simulated_data )
          A         B         C
A 1.0396708 0.5128317 0.7698858
B 0.5128317 1.0058647 0.7557131
C 0.7698858 0.7557131 1.0113318

Does this look right?
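One way to decide is to work out what the covariance matrix ought to be in theory. The sketch below assumes the simulation scheme described above, and writes each variable as a weighted sum of the three independent sources ($B$ and the two noise terms); the names `loadings`, `source_variances` and `expected_cov` are illustrative choices, not part of the tutorial.

```r
# Each row expresses a variable in terms of the independent sources
# (B, noise_A, noise_C), following the simulation scheme:
#   A = 0.5*B + noise_A
#   C = 0.5*A + 0.5*B + noise_C = 0.75*B + 0.5*noise_A + noise_C
loadings = matrix(
  c(
    0.5,  1,   0,
    1,    0,   0,
    0.75, 0.5, 1
  ),
  nrow = 3, byrow = TRUE,
  dimnames = list( c( "A", "B", "C" ), c( "B", "noise_A", "noise_C" ) )
)

# Variances of the independent sources: var(B) = 1, noise variances 0.75 and 0.25
source_variances = diag( c( 1, 0.75, 0.25 ) )

# Covariance of linear combinations of independent sources
expected_cov = loadings %*% source_variances %*% t( loadings )
print( expected_cov )
```

The result has $1$ on the diagonal, $0.5$ for the $A$-$B$ entry and $0.75$ for both the $A$-$C$ and $B$-$C$ entries, so a simulated matrix close to those values is what you should expect.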

Testing for association

Testing without controlling for the confounder

Now let's fit our linear regression model of the outcome variable $C$ on $A$:

fit1 = lm( C ~ A )

coeffs1 = summary(fit1)$coefficients  # full element name; $coeff only works through partial matching

print( coeffs1 )

What estimate do you get for the effect of $A$ on $C$? Does it capture the causal effect? Is it too high? Too low?

How confident is the regression in this estimate (e.g. how small is the standard error, or how low is the P-value)?
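Once you have an answer, you can compare it with what theory predicts. As a sketch, using the standard fact that the slope in a simple linear regression estimates the covariance divided by the predictor's variance, and plugging in the values implied by the simulation ($\text{var}(A) = 1$, $\text{cov}(A,B) = 0.5$):

```latex
\hat{\beta}_{C \sim A} \approx \frac{\text{cov}(A,C)}{\text{var}(A)}
  = \frac{0.5\,\text{var}(A) + 0.5\,\text{cov}(A,B)}{\text{var}(A)}
  = 0.5 + 0.5 \times 0.5
  = 0.75
```

In other words, the unadjusted estimate is expected to combine the causal effect ($0.5$) with the contribution of the path through $B$ ($0.5 \times 0.5 = 0.25$).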

Testing after controlling for the confounder

The great thing about regression models (as opposed to things like, say, T tests or 2x2 tables) is that they make it easy to control for confounders. Let's fit another linear regression that simultaneously includes both $A$ and $B$ as predictors:

fit2 = lm( C ~ A + B )

coeffs2 = summary(fit2)$coefficients  # full element name; $coeff only works through partial matching

print( coeffs2 )

What estimate do you get for the effect of $A$ on $C$? Does it capture the causal effect? Is it too high? Too low?

Is the true causal effect (i.e. $0.5$) within the 95% confidence interval around the estimate?
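One way to answer the interval question is with R's `confint()` function, which computes confidence intervals for the coefficients of a fitted `lm`. The sketch below re-simulates the data so that it runs on its own; the seed is an arbitrary assumption, and if you already have `fit2` from above you only need the last few lines.

```r
# Re-simulate the confounded data (same scheme as above; seed is arbitrary)
set.seed( 2024 )
N = 1000
B = rnorm( N, mean = 0, sd = 1 )
A = 0.5 * B + rnorm( N, mean = 0, sd = sqrt(0.75) )
C = 0.5 * A + 0.5 * B + rnorm( N, mean = 0, sd = sqrt(0.25) )

# Fit the model that controls for the confounder B
fit2 = lm( C ~ A + B )

# 95% confidence interval for the coefficient of A (a 1 x 2 matrix)
interval = confint( fit2, parm = "A", level = 0.95 )
print( interval )

# Does the interval contain the true causal effect of 0.5?
covers_truth = ( interval[ "A", 1 ] <= 0.5 ) && ( 0.5 <= interval[ "A", 2 ] )
print( covers_truth )
```

Bear in mind that a 95% interval is expected to miss the true value in roughly one simulation out of twenty, so an occasional `FALSE` does not by itself indicate a problem.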

Congratulations! By this point you should understand the basic concepts behind confounding and how they link to regression estimates. To check your understanding, try this challenge question:

Suppose we go back and re-simulate the data but with a true (causal) effect size of zero between $A$ and $C$.

Draw the causal diagram and explain what you think will happen to the estimates now.

Now go back and re-simulate this scenario to confirm your expectations.

- Hint 1

- Hint 2

- Hint 3

- Solution

Hint 1: Draw the causal diagram between $A$, $B$, and $C$. What is the causal relationship between $A$ and $C$? What paths through the graph will be picked up by the association test between $A$ and $C$?

Hint 2: To simulate, you need to re-simulate $C$ according to the formula:

$$C = 0.5 \times B + \text{noise}$$

...and keep things so that $C$ has variance $1$.

What variance of noise do you need to add to $0.5 \times B$ to do this?

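Hint 3: You need to re-simulate $C$, according to the formula:

$$C = 0.5 \times B + \text{noise}$$

so that $C$ has variance $1$.

What variance of noise do you need to add to $0.5 \times B$ to do this?

Well, according to the properties of the variance:

$$\text{var}(C) = \text{var}(0.5 \times B) + \text{var}(\text{noise}) = 0.25 \times \text{var}(B) + \text{var}(\text{noise})$$

...and of course $\text{var}(B) = 1$ because that's how we simulated it.

So what variance does the noise need to have?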

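Solution: The causal diagram now has only two arrows, $B \to A$ and $B \to C$; the arrow $A \to C$ is gone.

There is no causal relationship between $A$ and $C$, but $B$ is a confounder - a variable that affects both $A$ and $C$. You can expect that - if not controlled for - association between $A$ and $C$ will pick up the path through $B$.

To confirm this, you need to re-simulate $C$, according to the formula:

$$C = 0.5 \times B + \text{noise}$$

so that $C$ has variance $1$. What variance of noise do you need to add to $0.5 \times B$ to do this?

Well, according to the properties of the variance:

$$\text{var}(C) = \text{var}(0.5 \times B) + \text{var}(\text{noise}) = 0.25 \times \text{var}(B) + \text{var}(\text{noise})$$

...and of course $\text{var}(B) = 1$ because that's how we simulated it.

So this means the noise should have variance $0.75$ - the R code is: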

C = 0.5 * B + rnorm( N, sd = sqrt(0.75) )

(This was of course just the same calculation we did for $A$ above!)
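Putting the pieces together, a minimal end-to-end sketch of the challenge might look like the following. The seed and the names `C_null`, `unadjusted` and `adjusted` are assumptions made for illustration; everything else follows the simulation scheme used above.

```r
# Challenge scenario: B affects both A and C, but A has no effect on C.
set.seed( 1 )     # arbitrary seed, used only for reproducibility
N = 1000

B = rnorm( N, mean = 0, sd = 1 )
A = 0.5 * B + rnorm( N, mean = 0, sd = sqrt(0.75) )
C_null = 0.5 * B + rnorm( N, mean = 0, sd = sqrt(0.75) )   # no contribution from A

# Without controlling for B: theory predicts a slope near
# cov(A, C_null) / var(A) = 0.5 * 0.5 = 0.25
unadjusted = summary( lm( C_null ~ A ) )$coefficients
print( unadjusted )

# Controlling for B: theory predicts a coefficient for A near zero
adjusted = summary( lm( C_null ~ A + B ) )$coefficients
print( adjusted )
```

Comparing the `A` rows of the two tables shows the effect of the confounder: the unadjusted model picks up the path through $B$, while the adjusted model should give an estimate for $A$ that is compatible with zero.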